
By Matt Williams

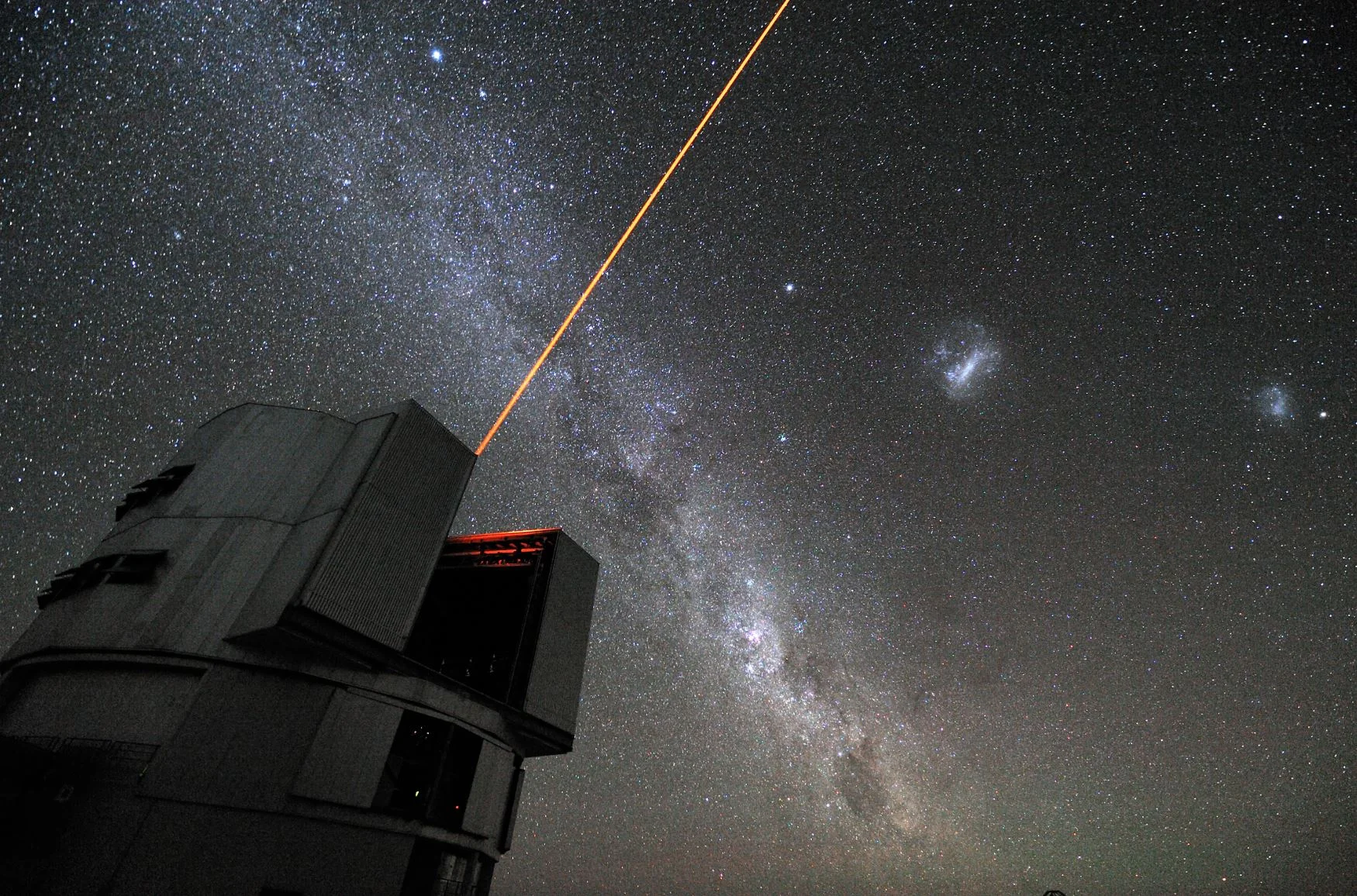

Image Credit: G. Hüdepohl/ESO via Wikimedia Commons

Telescopes have come a long way in the past few centuries. From the comparatively modest devices built by astronomers like Galileo Galilei and Johannes Kepler, telescopes have evolved to become massive instruments that require an entire facility to house them and a full crew and network of computers to run them. And in the coming years, much larger observatories will be constructed that can do even more.

Unfortunately, this trend towards larger and larger instruments has many drawbacks. For starters, increasingly large observatories require either increasingly large mirrors or many telescopes working together – both of which are expensive prospects. Luckily, a team from Harvard has proposed combining interferometry with quantum teleportation, which could significantly increase the resolution of arrays without relying on larger mirrors.

To put it simply, interferometry is a process where light is collected by multiple smaller telescopes and then combined to reconstruct images of what they observed. This process is used by such facilities as the Very Large Telescope Interferometer (VLTI) in Chile and the Center for High Angular Resolution Astronomy (CHARA) in California.

The former relies on four 8.2 m (27 ft) main mirrors and four movable 1.8 m (5.9 ft) auxiliary telescopes – which gives it a resolution equivalent to a 140 m (460 ft) mirror – while the latter relies on six one-meter telescopes, which gives it a resolution equivalent to a 330 m (1,083 ft) mirror. In short, interferometry allows telescope arrays to produce images of a higher resolution than would otherwise be possible.

One of the drawbacks is that photons are inevitably lost during the transmission process. As a result, arrays like the VLTI and CHARA can only be used to view bright stars, and building larger arrays to compensate for this once again raises the issue of costs. As Johannes Borregaard – a postdoctoral fellow at the University of Copenhagen’s Center for Mathematics of Quantum Theory (QMATH) and a co-author on the paper – told Universe Today via email:

“One challenge of astronomical imaging is to get good resolution. The resolution is a measure of how small the features are that you can image and it is ultimately set by the ratio between the wavelength of the light you are collecting and the size of your apparatus (Rayleigh limit). Telescope arrays function as one giant apparatus and the larger you make the array the better resolution you get.”

But of course, this comes at a very high cost. For example, the Extremely Large Telescope, which is currently being built in the Atacama Desert in Chile, will be the largest optical and near-infrared telescope in the world. When first proposed in 2012, the ESO indicated that the project would cost around 1 billion Euros ($1.12 billion) based on 2012 prices. Adjusted for inflation, that works out to $1.23 billion in 2018, and roughly $1.47 billion (assuming an inflation rate of 3%) by 2024 when construction is scheduled to be completed.
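As a rough check on those figures, the compounding can be sketched in a few lines of Python. The function names are illustrative only, and the dollar amounts and the 3% rate are simply the ones quoted above, not official ESO projections.

```python
def compound(amount, annual_rate, years):
    """Grow an amount at a fixed annual rate, compounded once per year."""
    return amount * (1 + annual_rate) ** years


def implied_annual_rate(start, end, years):
    """Average yearly rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
cost_2012 = 1.12
cost_2018 = 1.23

# Project the 2018 figure forward to 2024 at the assumed 3% per year.
cost_2024 = compound(cost_2018, 0.03, 2024 - 2018)

# Average inflation implied by the 2012 and 2018 figures.
rate_2012_2018 = implied_annual_rate(cost_2012, cost_2018, 2018 - 2012)

print(f"Projected 2024 cost: ${cost_2024:.2f} billion")
print(f"Implied 2012-2018 inflation: {rate_2012_2018:.2%} per year")
```

Under these assumptions, the projection reproduces the roughly $1.47 billion figure, and the 2012 and 2018 numbers imply an average inflation rate of a little over 1.5% per year.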

“Furthermore, astronomical sources are often not very bright in the optical regime,” Borregaard added. “While there exist a number of classical stabilization techniques to tackle the former, the latter poses a fundamental problem for how telescope arrays are normally operated. The standard technique of locally recording the light at each telescope results in too much noise to work for weak light sources. As a result, all current optical telescope arrays work by combining the light from different telescopes directly at a single measurement station. The price to pay is attenuation of the light in transmission to the measurement station. This loss is a severe limitation for constructing very large telescope arrays in the optical regime (current optical arrays have sizes of max. ~300 m) and will ultimately limit the resolution once effective stabilization techniques are in place.”

To address this, the Harvard team – led by Emil Khabiboulline, a graduate student at Harvard’s Department of Physics – suggests relying on quantum teleportation. In quantum physics, teleportation describes the process where properties of particles are transported from one location to another via quantum entanglement. This, as Borregaard explains, would allow for images to be created without the losses encountered with normal interferometers:

“One key observation is that entanglement, a property of quantum mechanics, allows us to send a quantum state from one location to another without physically transmitting it, in a process called quantum teleportation. Here, the light from the telescopes can be “teleported” to the measurement station, thereby circumventing all transmission loss. This technique would in principle allow for arbitrary sized arrays assuming other challenges such as stabilization are dealt with.”

Applied to quantum-assisted telescopes, the idea would be to create a constant stream of entangled pairs. While one of the paired particles would reside at the telescope, the other would travel to the central interferometer. When a photon arrives from a distant star, it would interact with the particle held at the telescope and be immediately teleported to the interferometer to create an image.

Using this method, images can be created without the losses encountered with normal interferometers. The idea was first suggested in 2011 by Dr. Mankei Tsang of the National University of Singapore and University of New Mexico’s Center for Quantum Information and Control (CQuIC). At the time, Tsang and other researchers understood that the concept would need to generate an entangled pair for each incoming photon, which is on the order of trillions of pairs per second.

This was simply not possible using then-current technology; but thanks to recent developments in quantum computing and storage, it may now be possible. As Borregaard indicated:

“[W]e outline how the light can be compressed into small quantum memories that preserve the quantum information. Such quantum memories could consist of atoms that interact with the light. Techniques for transferring the quantum state of a light pulse into an atom have already been demonstrated a number of times in experiments. As a result of compression into memory, we use up significantly fewer entangled pairs compared to memoryless schemes such as the one by Gottesman et al. For example, for a star of magnitude 10 and measurement bandwidth of 10 GHz, our scheme requires ~200 kHz of entanglement rate using a 20-qubit memory instead of the 10 GHz before. Such specifications are feasible with current technology and fainter stars would result in even bigger savings with only slightly larger memories.”
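To put the quoted numbers side by side, here is a small Python sketch of the savings Borregaard describes. It uses only the two rates from the quote; the variable names are made up for illustration, and the calculation says nothing about how the memory scheme itself works.

```python
# Entanglement rates quoted for a magnitude-10 star, in pairs per second (Hz).
memoryless_rate_hz = 10_000_000_000  # 10 GHz, one pair per time slot of the measurement bandwidth
memory_rate_hz = 200_000             # ~200 kHz with a 20-qubit memory

# How many times fewer entangled pairs the memory-based scheme needs.
savings_factor = memoryless_rate_hz // memory_rate_hz

print(f"Entangled pairs needed drop by a factor of about {savings_factor:,}")
```

Taking the quoted rates at face value, the memory-based approach needs tens of thousands of times fewer entangled pairs per second than a memoryless one.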

This method could lead to some entirely new opportunities when it comes to astronomical imaging. For one, it would dramatically increase the resolution of images, and perhaps make it possible for arrays to achieve resolutions that are equivalent to that of a 30 km mirror. In addition, it could allow astronomers to detect and study exoplanets using the direct imaging technique with resolutions down to the micro-arcsecond level.

“The current record is around milli-arcseconds,” said Borregaard. “Such an increase in resolution will allow astronomers to access a number of novel astronomical frontiers ranging from determining characteristics of planetary systems to studying cepheids and interacting binaries… Of interest to astronomical telescope designers, our scheme would be well-suited for implementation in space, where stabilization is less of an issue. A space-based optical telescope on the scale of 10^4 kilometers would be very powerful, indeed.”
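The jump from milli-arcseconds to micro-arcseconds follows from the ratio Borregaard mentioned earlier: resolution scales with wavelength divided by the size of the apparatus. The Python sketch below estimates this for the array sizes mentioned in this article. It assumes green visible light at 550 nm and the simple approximation θ ≈ λ/B, ignoring order-unity factors that depend on the instrument. Both are illustrative assumptions rather than values taken from the study.

```python
import math

# Conversion factor from radians to arcseconds.
RAD_TO_ARCSEC = math.degrees(1) * 3600


def angular_resolution_arcsec(wavelength_m, baseline_m):
    """Approximate diffraction-limited resolution, theta ~ wavelength / baseline."""
    return (wavelength_m / baseline_m) * RAD_TO_ARCSEC


WAVELENGTH_M = 550e-9  # assumed observing wavelength (green light)

# Effective sizes mentioned in the article, in meters.
baselines_m = {
    "VLTI (140 m)": 140.0,
    "CHARA (330 m)": 330.0,
    "Quantum-assisted array (30 km)": 30e3,
    "Space-based array (10^4 km)": 1e7,
}

for name, baseline in baselines_m.items():
    theta = angular_resolution_arcsec(WAVELENGTH_M, baseline)
    # Report in micro-arcseconds so all four rows share one unit.
    print(f"{name}: {theta * 1e6:,.3f} micro-arcseconds")
```

With these assumptions, the existing arrays come out at a fraction of a milli-arcsecond, while a 30 km baseline lands at a few micro-arcseconds, consistent with the scales quoted above.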

In the coming decades, many next-generation space and ground-based observatories are set to be built or deployed. Already, these instruments are expected to offer greatly increased resolution and capability. With the addition of quantum-assisted technology, these observatories might even be able to resolve the mysteries of dark matter and dark energy, and study extra-solar planets in amazing detail.

The team’s study, “Quantum-Assisted Telescope Arrays”, recently appeared online. In addition to Khabiboulline and Borregaard, the study was co-authored by Kristiaan De Greve (a Harvard postdoctoral fellow) and Mikhail Lukin – a Harvard Professor of Physics and the head of the Lukin Group at Harvard’s Quantum Optics Laboratory.

Source: Universe Today - Further Reading: MIT Technology Review, arXiv
