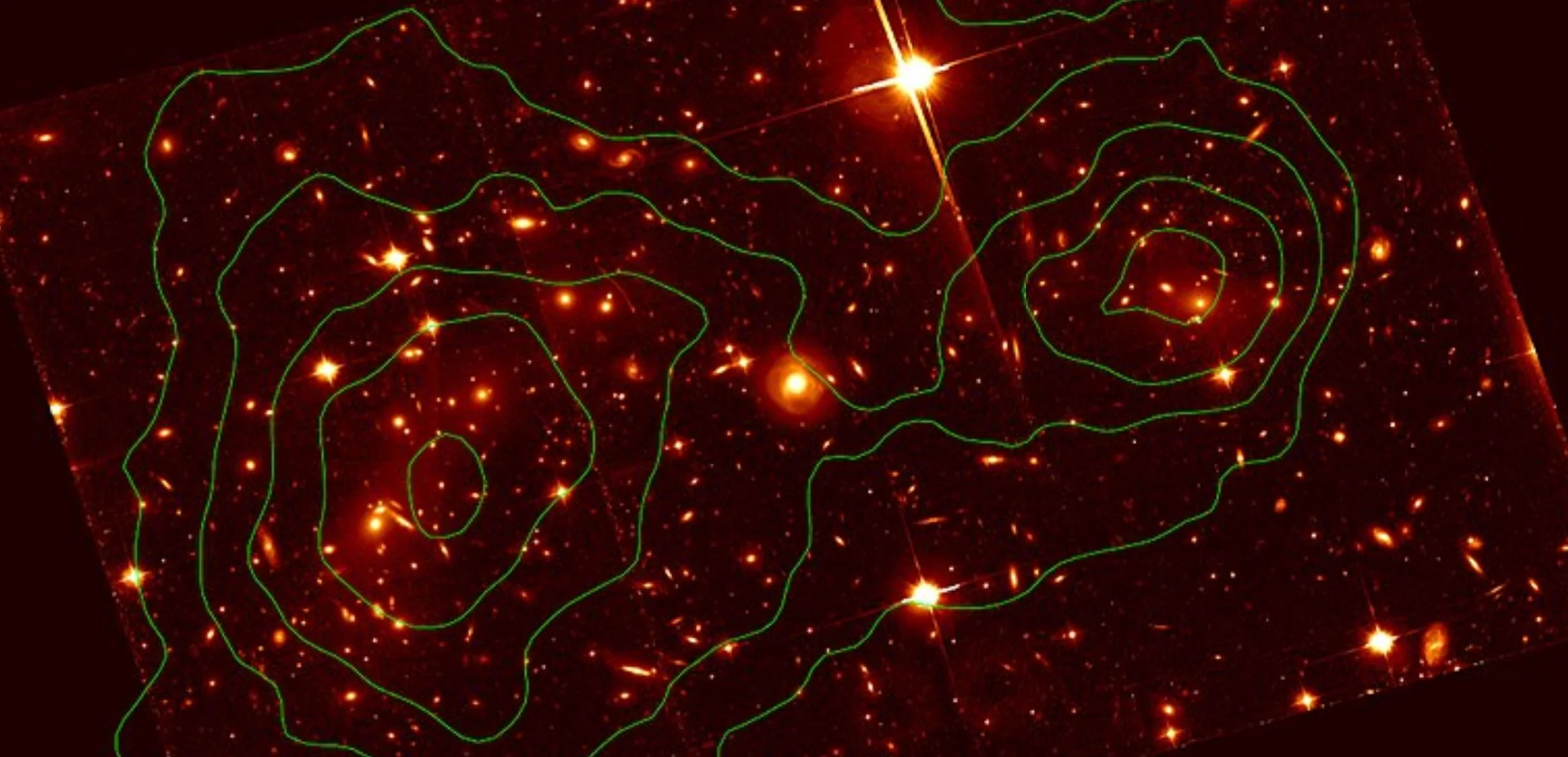

A typical computer-generated dark matter map used by the researchers to train their neural network. - Image Credits: ETH Zurich

When trying to understand the history and future of our universe, we can't rely only on what our eyes see when we look up at the night sky. Dark matter and dark energy together make up approximately 95% of the universe. These two poorly understood components form a large piece of the puzzle. Dark energy is the driving force behind the accelerated expansion of the cosmos, while dark matter holds galaxies together. Cosmologists (astronomers who study the origin and evolution of the universe) need to know precisely how much of each is out there for their models to work accurately.

Recently, physicists from the Swiss Federal Institute of Technology in Zurich (ETH Zurich) joined forces with its department of computer science to improve conventional techniques for estimating the percentage of dark matter by using artificial intelligence. They used leading-edge machine learning algorithms akin to those used for facial recognition on social media platforms.

It may seem a little odd to use facial recognition algorithms as the basis for this project. However, the approach has proven useful: although there are no faces to be recognized, the researchers are looking for something fairly similar. Tomasz Kacprzak, one of the scientists, explained that Facebook uses its algorithms to find mouths, ears, and eyes in photographs, while the team uses theirs to look for the tell-tale signs of dark matter and dark energy.

It is not possible to observe dark matter directly with telescopes. Scientists can detect it by observing slight bends in the path of light rays from faraway galaxies, caused by the immense gravity of dark matter. This bending of light rays is called weak gravitational lensing.
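
To get a feel for how slight these bends are, here is a minimal sketch that uses the textbook general-relativity formula for light passing a single point mass, 4GM / (c²b). It is an illustration only: the Sun is used as the example mass, the function name and constants are chosen for this sketch, and real weak-lensing analyses deal with extended distributions of matter rather than a single point.

```python
import math

G = 6.674e-11         # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8           # speed of light, m/s
SUN_MASS = 1.989e30   # kg
SUN_RADIUS = 6.957e8  # m

def deflection_arcsec(mass_kg, impact_parameter_m):
    """Bending angle of a light ray passing a point mass: 4GM / (c^2 b)."""
    angle_rad = 4.0 * G * mass_kg / (C ** 2 * impact_parameter_m)
    return math.degrees(angle_rad) * 3600.0  # radians -> arcseconds

# Light grazing the edge of the Sun (illustrative example, not dark matter).
print(f"{deflection_arcsec(SUN_MASS, SUN_RADIUS):.2f} arcseconds")
```

Even for a ray that skims the Sun, the bend is under two arcseconds, a tiny fraction of a degree, which is why lensing has to be measured statistically over many galaxies.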

A gravitational lens mirage - Its multiple properties allow astronomers to determine the mass and dark matter content of the foreground galaxy lenses. - Image Credit: ESA/Hubble & NASA via Wikimedia Commons

Scientists can use gravitational lensing to create mass maps of the sky, indicating the locations of dark matter. After creating such maps, they can compare them to theoretical predictions and see which models match the observations best. Ordinarily, this comparison relies on human-designed statistics. A significant disadvantage of working this way is that such statistics are limited in their ability to find complicated patterns.

To overcome this disadvantage, the researchers turned to computers. Instead of designing the statistical analysis themselves, they let artificial intelligence have a go at it. They used machine learning algorithms called deep artificial neural networks, which are able to teach themselves.

At first, the researchers trained the neural network by feeding it data from a simulated universe. As the correct percentage and distribution of dark matter in this data was already known, they could check whether the network was doing a good job. By repeatedly analyzing a large number of dark matter maps, the neural network ultimately taught itself to look for the right features in those maps and to extract more of the desired information.
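
The train-on-simulations-then-check idea can be sketched in a few lines of Python. This is a toy, not the researchers' method: the "maps" are plain random noise whose spread is set by one made-up parameter, and an ordinary least-squares fit on two hand-picked summary numbers stands in for the deep neural network (which would learn its own features). All names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def simulate_map(amplitude, size=8):
    """Toy 'mass map': Gaussian noise whose spread is set by one known parameter."""
    return amplitude * rng.standard_normal((size, size))

def summarize(mass_map):
    """Hand-picked summary numbers; a real network would learn its own features."""
    return np.array([1.0, np.abs(mass_map).mean(), mass_map.std()])

def make_dataset(n_maps):
    """Simulate maps for randomly drawn parameter values that we know exactly."""
    amplitudes = rng.uniform(0.5, 1.5, size=n_maps)
    features = np.stack([summarize(simulate_map(a)) for a in amplitudes])
    return features, amplitudes

# Train on simulations where the true parameter is known.
train_x, train_y = make_dataset(2000)
weights, *_ = np.linalg.lstsq(train_x, train_y, rcond=None)

# Check on fresh simulations that were not used for fitting.
test_x, test_y = make_dataset(500)
predictions = test_x @ weights
rmse = float(np.sqrt(np.mean((predictions - test_y) ** 2)))
baseline = float(test_y.std())  # error of always guessing the average value
print(f"held-out RMSE: {rmse:.3f} (baseline {baseline:.3f})")
```

Because the true parameter of every simulated map is known, the held-out error says directly how well the fitted model recovers it, which is the same kind of check the article describes for the neural network.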

Image of the Bullet Cluster from the Hubble Space Telescope with total mass contours (dominated by dark matter) from a lensing analysis overlaid. - Image Credit: Mac_Davis / NASA via Wikimedia Commons

The results of the exercise were exhilarating: the neural networks turned out to be 30% more accurate than conventional (human-made) methods of statistical analysis. That is a substantial gain, as it would take approximately twice the amount of (costly) observation time to reach comparable accuracy without these neural networks.
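
The two figures fit together under a common rule of thumb, assumed here for illustration: that measurement uncertainty shrinks with the square root of the observation time. The study may have arrived at its comparison differently, and the function names below are hypothetical.

```python
import math

def error_reduction(time_factor):
    """Fractional drop in uncertainty if error scales as 1 / sqrt(observing time)."""
    return 1.0 - 1.0 / math.sqrt(time_factor)

def time_factor_needed(reduction):
    """Observing-time multiplier needed for a given fractional drop in uncertainty."""
    return 1.0 / (1.0 - reduction) ** 2

print(f"Doubling the observing time: {error_reduction(2.0):.1%} smaller uncertainty")
print(f"Time needed for a 30% gain: {time_factor_needed(0.30):.2f}x")
```

Under that assumption, doubling the observing time shrinks the uncertainty by a little under 30%, in line with the gain quoted for the neural network.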

Subsequently, the fully trained neural network was used to analyze genuine dark matter maps from the KiDS-450 dataset. Janis Fluri, one of the lead authors of the study, stated that this was the first time this type of machine learning had been used in dark matter research. Cosmologists expect that machine learning will have numerous future applications in their field.

Sources and further reading: Cosmological constraints with deep learning from KiDS-450 weak lensing maps / Machine learning / ETH Zurich press release
